
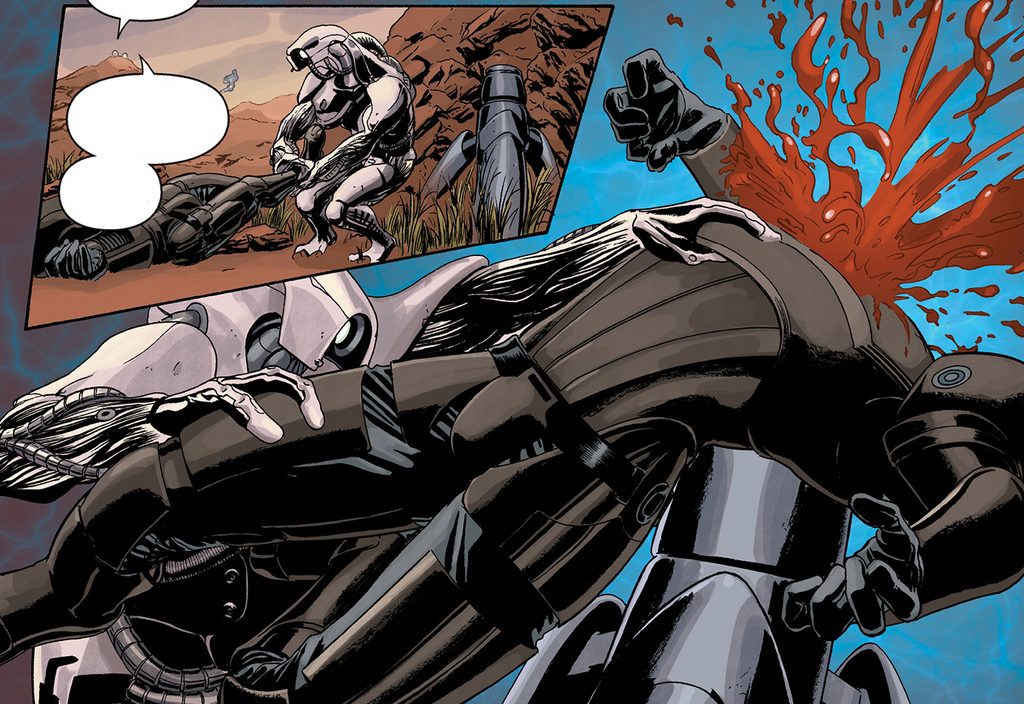

Ever since the creation of the first weapon with the power to kill in one shot, man has been on a collision course with doomsday. As we speak, the world's biggest military powers are trying to create the next generation of weapons: completely autonomous weapons. Those of you who already see what I'm getting at, and have probably seen the Terminator movies, already know what's to come. From my vast research, I have come to the conclusion that within the next two decades the US, Japan, and other high-tech nations with the capability to create artificial intelligence will have weapons that are not guided in any way by humans. These weapons will be completely self-controlled. And you know what they will target? Us. That's right. Just as in the movie Terminator, they will see us as not only their creator but also their greatest threat. They will see us realizing our mistake and trying to shut them down, and as they see us trying to do so, they will begin attacking the people trying to take them down.

If you think I am just crazy and don't know what I'm talking about, go ahead and laugh. For years I have been a conspiracy theorist, and I have been able to piece together things that not many people would think could happen. I have been able to use cause and effect on a much higher level. Using cause and effect, I have determined that we will be spelling our own demise as we create these weapons.

Google has an AI that is constantly learning. And you know why? So that eventually the US military can take it for its own and use it in weapons like drones and missiles, which we are already getting ahead in, and also in machines like the one that has already been developed. Those of you who saw the YouTube video of that completely autonomous machine will see that we are already on our way to destruction by our own creations. Below is a link to that video.

http://www.newsy.com/videos/google-atlas-robot-kind-of-emulates-karate-kid-move/

I was unable to find the actual YouTube videos, but this is what I saw. Now imagine seeing this armed and armored. This could be the first machine to lead the way to the extermination of the human race. I have seen this coming ever since I started getting into computers. I have known that with the advancements we are making in technology, we will eventually create weapons that do not need human guidance. They will be able to fight our wars. But as I said earlier, they will see us as a threat, determine that we are no longer needed, and do all they can to kill us. For those who don't believe what I am saying, heed my warning: if this is not stopped, we will all be doomed. We will all be slaughtered like sheep.
